Variational Autoencoder on the MNIST Dataset

Expertise: Probabilistic learning, variational Bayesian autoencoders, deep learning.

Skills: Julia, Flux, Zygote, MNIST dataset.

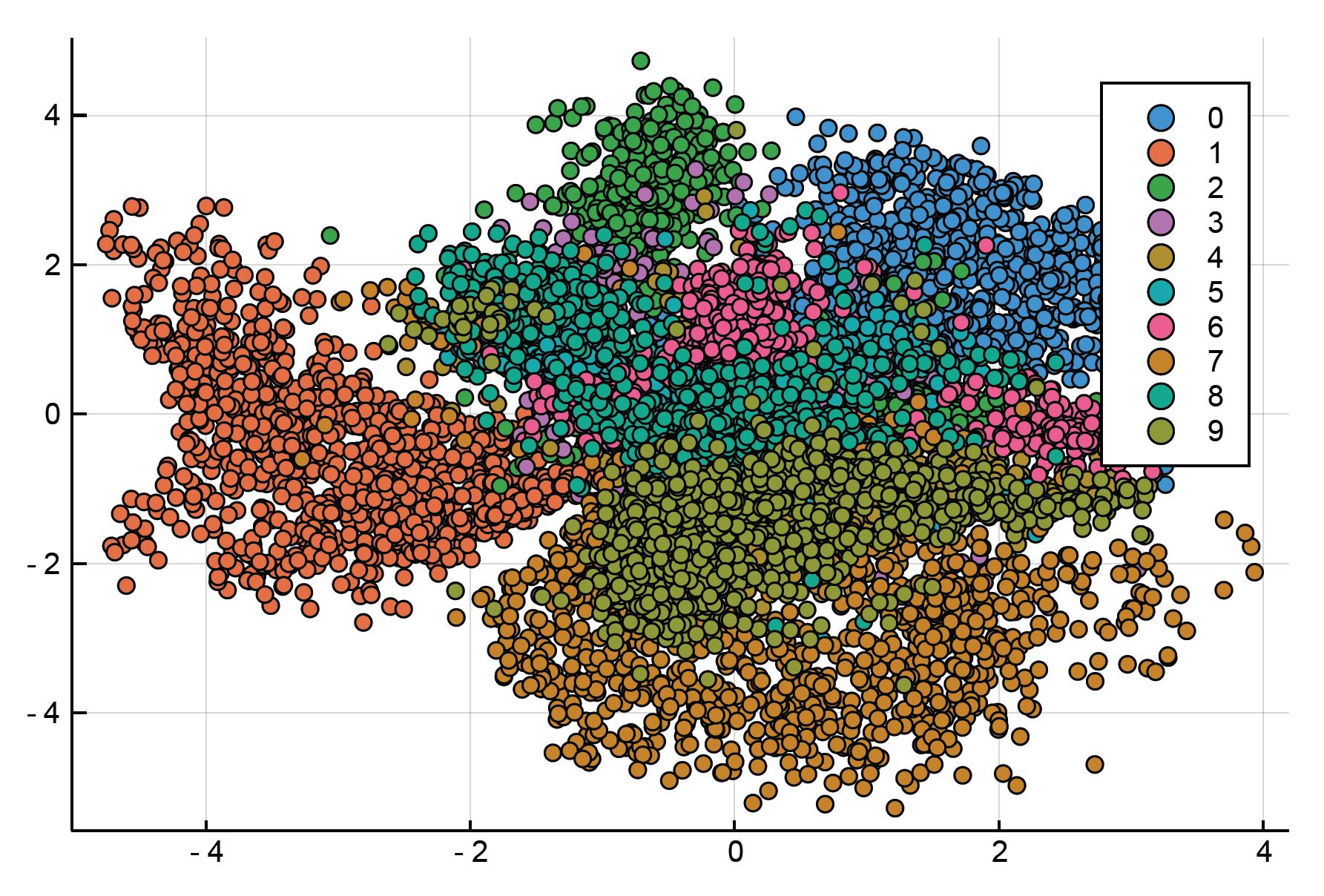

This project reproduces and extends the results of Kingma and Welling (2013) by implementing a Variational Autoencoder (Auto-Encoding Variational Bayes) to reconstruct MNIST digits. The MNIST dataset contains grayscale images of handwritten digits from 0 to 9.
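For reference, the objective maximized in Auto-Encoding Variational Bayes is the evidence lower bound (ELBO), written here in the notation of Kingma and Welling. The Gaussian forms of the approximate posterior and the prior are the standard choice from that paper and are assumed here, since this page does not spell them out:

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right),
\qquad q_\phi(z \mid x) = \mathcal{N}\left(\mu_\phi(x), \operatorname{diag}(\sigma_\phi^2(x))\right), \quad p(z) = \mathcal{N}(0, I)
$$

The first term rewards accurate reconstruction of the digit $x$ from its latent code $z$. The second term keeps the encoder's distribution close to the prior, which is what makes sampling new digits from $p(z)$ meaningful.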

The code for this project is hosted on GitHub at github.com/amrmsab/variational_autoencoder_MNIST.

Benefit 1: Autoencoders can represent the digit images in a low-dimensional latent space, which lets us visualize how the different digits separate from one another.

Benefit 2: Autoencoders can help us generate new digit images by decoding points sampled from the latent space.

Benefit 3: Autoencoders can help us reconstruct incomplete images.
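The latent space behind these benefits is typically built from two small pieces: the reparameterization trick for sampling a latent code, and the closed-form KL divergence between a diagonal Gaussian and a standard normal prior. The following is a minimal sketch in plain Julia (no Flux), assuming a Gaussian encoder output. The names `reparameterize` and `kl_to_standard_normal` are hypothetical and are not taken from the project's repository.

```julia
using Random

# Reparameterization trick: z = μ + σ .* ε with ε ~ N(0, I).
# The encoder is assumed to output the mean μ and the log-variance logσ².
function reparameterize(rng::AbstractRNG, μ::AbstractVector, logσ²::AbstractVector)
    ε = randn(rng, length(μ))          # noise drawn independently of the parameters
    σ = exp.(0.5 .* logσ²)             # standard deviation recovered from log-variance
    return μ .+ σ .* ε
end

# Closed-form KL( N(μ, diag(σ²)) || N(0, I) ), summed over latent dimensions.
function kl_to_standard_normal(μ::AbstractVector, logσ²::AbstractVector)
    return -0.5 * sum(1 .+ logσ² .- μ .^ 2 .- exp.(logσ²))
end

rng = MersenneTwister(0)

# Toy encoder output for a 2-dimensional latent space (illustrative values only).
μ = [0.5, -1.0]
logσ² = [0.0, 0.0]

z = reparameterize(rng, μ, logσ²)      # one latent sample for this input
kl = kl_to_standard_normal(μ, logσ²)   # penalty for drifting away from the prior

# To generate a new digit (Benefit 2), one would instead draw z from the prior
# and pass it through the trained decoder, which is not shown here.
z_prior = randn(rng, 2)
```

Because the noise `ε` is sampled separately from `μ` and `logσ²`, gradients can flow through the sample `z` back to the encoder parameters, which is what allows an automatic differentiation tool such as Zygote to train the model end to end.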